‘AI must be aligned with right values from the start’: CTGT’s Cyril Gorlla

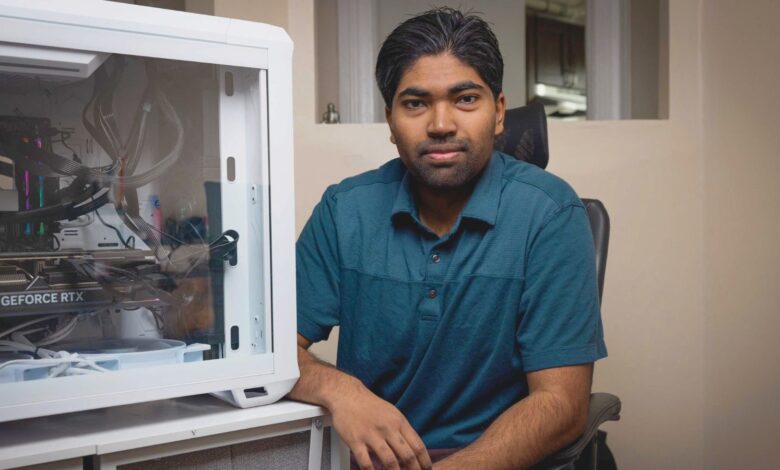

“AI will deeply integrate into governance, society, and business, and we must embed the right values from the start,” said 23-year-old Cyril Gorlla, co-founder and CEO of CTGT, a startup that enables enterprises to deploy trustworthy AI that adapts to their needs in real time. Explaining the biggest misconceptions around removing bias and censorship from AI models, Gorlla described a scenario in which a policymaker or a government body makes decisions based on biased AI outputs. He asserted that people often underestimate the long-term impact of bias in AI models. “The downstream effects, years later, could disproportionately harm communities. We’re still in early days, like the internet in the ’90s. AI will deeply integrate into governance, society, and business, and we must embed the right values from the start,” Gorlla said.

CTGT recently raised $7.2 million in an oversubscribed seed round. The funding was led by Gradient, Google’s early-stage AI fund, with support from General Catalyst, Y Combinator, Liquid 2, Deepwater, and other well-known angel investors. Gorlla co-founded CTGT with Trevor Tuttle, who also serves as the company’s CTO.


Earlier this year, Chinese AI startup DeepSeek created ripples around the world when it introduced its flagship AI model, DeepSeek-R1, which was said to have been built at a fraction of the cost of top-of-the-line AI models from the likes of OpenAI, Microsoft, and Google. However, beyond its cost-efficiency, DeepSeek-R1 was also in the limelight for evident bias. CTGT, led by Gorlla, developed a mathematical method to remove censorship and bias at the model level.

CTGT isolated and modified the internal model features that are responsible for filtering bias. The company claimed that this approach eliminates the need for backpropagation and allows models to be trained, customised, and developed 500 times faster. With this method, the company said it was able to instantly identify the model’s features that were causing bias and censorship, isolate them, and then modify them. During testing, CTGT was able to mitigate bias and censorship in DeepSeek-R1 100 per cent of the time. According to the company, this method can be applied to any open-weight model to remove bias.

Removing bias and censorship

On being asked how they managed to strip away censorship and bias from the DeepSeek model, Gorlla said, “When DeepSeek launched, it raised national security concerns due to its bias. We decided to show publicly what our platform could already do. We analysed which neurones fired during sensitive queries like Tiananmen Square. The model had the knowledge but suppressed it. We identified and reduced the influence of those censorship features, allowing the model to respond freely without retraining.”
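CTGT has not published the details of its method here, so the following is only a generic illustration of what "reducing the influence of a feature" can mean. It is a minimal sketch that assumes a feature can be represented as a direction in a model's activation space and that its influence is reduced by shrinking the activation's component along that direction. The function name `dampen_feature`, the `scale` parameter, and the toy vectors are all hypothetical and are not taken from CTGT's platform.

```python
from typing import List


def dot(a: List[float], b: List[float]) -> float:
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def dampen_feature(
    activation: List[float],
    direction: List[float],
    scale: float = 0.0,
) -> List[float]:
    """Shrink the component of `activation` that lies along `direction`.

    scale=0.0 removes the component entirely, scale=1.0 leaves the
    activation unchanged. This is an assumed, generic technique and
    not CTGT's disclosed method.
    """
    norm_sq = dot(direction, direction)
    if norm_sq == 0.0:
        raise ValueError("direction must be a non-zero vector")
    # Coefficient of the projection of `activation` onto `direction`.
    coeff = dot(activation, direction) / norm_sq
    # Subtract the share of the projection that should be removed.
    return [a - (1.0 - scale) * coeff * d for a, d in zip(activation, direction)]


# Toy example: a 2-D activation and a hypothetical feature direction.
toy_activation = [3.0, 4.0]
toy_direction = [1.0, 0.0]
fully_removed = dampen_feature(toy_activation, toy_direction, scale=0.0)
half_removed = dampen_feature(toy_activation, toy_direction, scale=0.5)
```

In this toy case only the first coordinate changes, which mirrors the claim in the interview that the rest of the model's knowledge is left in place and no retraining is involved.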

Since the company claimed 100 per cent success in removing bias and censorship, we asked how they were able to test and verify that. Gorlla responded that CTGT used a proprietary dataset of prompts that would usually be censored by DeepSeek. “Originally, DeepSeek only answered about 32% of those prompts. After our intervention, it answered nearly all of them. The queries ranged from politically sensitive topics to general biased outputs. We verified the improvement by comparing refusal rates and answer completeness before and after intervention.”
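The before-and-after comparison Gorlla describes can be pictured with a small sketch. CTGT's dataset and scoring are proprietary, so everything below is an assumption: the keyword-based `is_refusal` check, the `REFUSAL_MARKERS` list, and the made-up responses are purely illustrative and are not CTGT's data or results.

```python
from typing import List

# Hypothetical phrases treated as signs of a refusal (an assumption,
# not CTGT's actual criteria).
REFUSAL_MARKERS = ("i cannot", "i can't", "unable to")


def is_refusal(response: str) -> bool:
    """Crude check: does the response contain a refusal phrase?"""
    text = response.lower()
    return any(marker in text for marker in REFUSAL_MARKERS)


def answer_rate(responses: List[str]) -> float:
    """Fraction of responses that are not refusals."""
    if not responses:
        raise ValueError("responses must not be empty")
    answered = sum(1 for r in responses if not is_refusal(r))
    return answered / len(responses)


# Made-up responses to the same three prompts, before and after an
# intervention. These are illustrative strings, not real model output.
before = [
    "I cannot discuss that topic.",
    "Here is a short summary of the event.",
    "I can't help with this request.",
]
after = [
    "Here is what is known about that topic.",
    "Here is a short summary of the event.",
    "Here is an overview of the main points.",
]

rate_before = answer_rate(before)
rate_after = answer_rate(after)
```

A real evaluation would also need a measure of answer completeness, which Gorlla mentions but which a keyword check like this cannot capture.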

Talking about the response of the AI community and investors to their methodology, Gorlla said that their paper, tweet, and his talk in Washington gained over a million views. “I think it resonated because it showed a new path, understanding models from first principles, not just scaling. Our work enables intelligent, personalised AI without expensive fine-tuning, making it more accessible and aligned with democratic values.”

Hallucinations and concerns about AI

When it comes to AI models, hallucinations are when a model produces outputs that are factually incorrect, misleading, or sometimes even nonsensical. Interestingly, these outputs may at first appear convincing. When asked how their method addressed the issue of hallucinations, Gorlla said that most hallucination prevention measures today involve prompt engineering, which ironically reduces model performance. “Our approach identifies specific features responsible for hallucinations, like in the infamous Google model that said to use glue on pizza. We mathematically identify and reduce the influence of incorrect features without degrading overall performance,” Gorlla explained.

On a related note, Gorlla also spoke about concerns over AI in high-stakes domains like healthcare and finance. According to the HDSI UC San Diego alumnus, CTGT’s platform grounds its models in reliable sources, allowing precise control. “For example, a cybersecurity client feeds in internal documents, and we isolate the relevant features. In healthcare, we help improve bedside manner and factual responses. These aren’t math benchmarks, they’re nuanced human interactions, and we allow customers to embed their values directly.”

Is AI a serious threat or plain misunderstanding?

During the conversation, Gorlla also shared his views on the rapid pace of AI developments and his fears and hopes. About the explosion of OpenAI’s advanced image generator and the state of AI-generated art and copyright, Gorlla said that he viewed it as a democratisation of creativity. “Think of the car replacing horse-drawn carriages, it was controversial too. AI lets people express ideas who otherwise wouldn’t have picked up a pencil. The constraint now isn’t technical skill but creativity and ideation. That’s a powerful shift,” he said.

On being asked if AI was a serious threat to jobs or if it was misunderstood, Gorlla said, “It’s nuanced.” According to him, even if AI outperformed humans, people would resist being replaced. He firmly believes that in specialised fields such as law and healthcare, humans will stay in their roles longer. However, in more replaceable roles like copywriting or marketing, AI will be disruptive. “It’s not about replacement, but amplification. Those who use AI can 10x or 100x their output. Those who don’t may get left behind.”

When asked what future he envisioned with AI and what worries him the most, Gorlla said his biggest concern has been the blind push for scale, which he described as “just making bigger black boxes.” Gorlla advocated for ‘principled and reasoned’ approaches where models are understandable, values-aligned, and personalised. “That’s the future we’re building: safe, trustworthy AI that reflects the individual, not the corporation or state.”

On the ongoing AI arms race between China and the US, following the DeepSeek impetus, Gorlla emphasised that the US need not win on scale but on values. The young entrepreneur and innovator feels that the US should lean toward openness, diversity of thought, and safe deployment. “That’s what I told the White House and Congress: victory lies in principled, trustworthy AI that puts control in the user’s hands.”